Recently, the big data research team has made a series of advances in artificial intelligence and big data processing. The results have been published in three top artificial intelligence journals: IEEE Transactions on Neural Networks and Learning Systems, IEEE Transactions on Cybernetics, and Information Sciences. Neural networks are one of the most popular research topics in artificial intelligence and are the foundation of deep learning. Although deep learning has developed rapidly in recent years, how to design the optimal architecture of a neural network remains an open question. To address this, the team designed a series of models for network structure optimization, feature selection, and recurrent neural networks for time series, which mitigate weaknesses of artificial intelligence models and improve their learning performance.

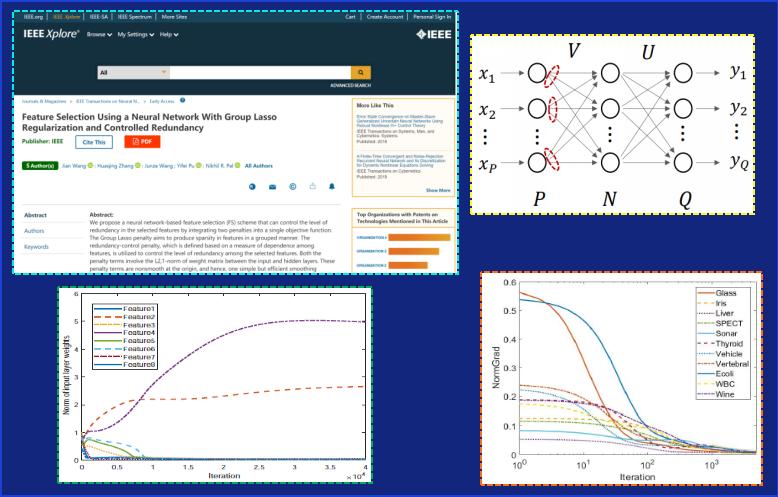

Fig. 1 Feature Selection using a Neural Network With Group Lasso Regularization and Controlled Redundancy

The research paper titled “Feature Selection using a Neural Network With Group Lasso Regularization and Controlled Redundancy” was published in IEEE Transactions on Neural Networks and Learning Systems (IF: 11.683), an authoritative international journal in artificial intelligence. Associate Prof. Wang Jian and Ph.D. student Zhang Huaqing are the co-first authors. Prof. Nikhil R. Pal (Indian Statistical Institute), an Honorary Professor of UPC, participated in guiding the work. This work is supported by the National Natural Science Foundation of China, the Natural Science Foundation of Shandong Province, the Fundamental Research Funds for the Central Universities, the Science and Technology Support Plan for Youth Innovation of University in Shandong Province, and the Major Scientific and Technological Projects of CNPC.

Feature selection, also known as attribute selection, is the process of choosing the most informative features (attributes) from the original set in order to reduce the dimensionality of the data. It is a critical step in artificial intelligence and an important procedure in big data processing. In this work, Group Lasso and dependency penalties were integrated into a neural network to build an embedded model. The model selects the most useful features for a given task while controlling the redundancy among the selected features. As a result, the proposed model achieved strong dimensionality reduction and demonstrated satisfactory generalization ability.
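As a rough illustration of how such penalties can be computed, the following minimal sketch treats the weights leaving each input feature as one group. It is not the authors' implementation: the function names, the example weight matrix `W`, the dependency matrix `dep` (assumed here to be something like a squared correlation between features), and the exact form of the redundancy term are all assumptions made for illustration.

```python
import math

def group_norms(W):
    """L2 norm of each row; row i holds the weights leaving input feature i."""
    return [math.sqrt(sum(w * w for w in row)) for row in W]

def group_lasso_penalty(W):
    """Sum of group norms; driving a whole row to zero discards that feature."""
    return sum(group_norms(W))

def redundancy_penalty(W, dep):
    """Assumed form: penalize pairs of features that are both kept
    (large norms) and strongly dependent (large dep[i][j])."""
    norms = group_norms(W)
    p = len(norms)
    total = 0.0
    for i in range(p):
        for j in range(p):
            if i != j:
                total += norms[i] * norms[j] * dep[i][j]
    return total / (p * (p - 1))

def selected_features(W, tol=1e-3):
    """Indices of features whose outgoing weight group is not (near) zero."""
    return [i for i, n in enumerate(group_norms(W)) if n > tol]

# Hypothetical weights: 3 input features, 2 hidden nodes.
W = [[0.9, -0.4],
     [0.0, 0.0],   # feature 1 has been driven to zero
     [0.3, 0.4]]
# Hypothetical symmetric dependency matrix with values in [0, 1].
dep = [[1.0, 0.2, 0.8],
       [0.2, 1.0, 0.1],
       [0.8, 0.1, 1.0]]

kept = selected_features(W)
penalty = group_lasso_penalty(W) + redundancy_penalty(W, dep)
```

In training, a weighted sum of the two penalties would be added to the usual loss, so that the optimizer trades accuracy against the number and redundancy of retained features.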

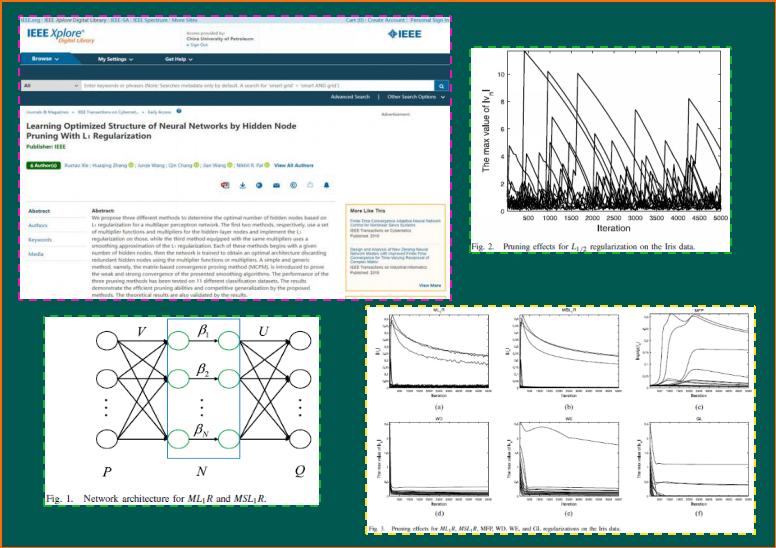

Fig. 2 Learning Optimized Structure of Neural Networks by Hidden Node Pruning With L1 Regularization

The research paper titled “Learning Optimized Structure of Neural Networks by Hidden Node Pruning With L1 Regularization” was published in the prestigious international journal IEEE Transactions on Cybernetics (T0 Journal, IF: 10.387). Master's student Xie Xuetao and Ph.D. student Zhang Huaqing are the co-first authors. Associate Prof. Wang Jian is the corresponding author, and Prof. Nikhil R. Pal (Indian Statistical Institute, Member of the Indian National Science Academy, IEEE Fellow, Academician), an Honorary Professor of UPC, participated in guiding the work. The research is supported by the National Natural Science Foundation of China, the Natural Science Foundation of Shandong Province, and the Fundamental Research Funds for the Central Universities.

Building on the sparsity induced by L1 regularization, this work proposed two learning models for optimizing the structure of neural networks. A further notable contribution is a simple but general method for proving convergence. The empirical results demonstrated the strong robustness, satisfactory pruning ability, and good generalization of the proposed models, which are particularly suitable for high-dimensional data. This research helps construct the simplest possible model structures in artificial intelligence and deep learning.
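To show how an L1 penalty can lead to hidden node pruning, the sketch below applies a standard proximal (soft-thresholding) update to the outgoing weights of each hidden node and then lists the nodes that still have a nonzero weight. Soft-thresholding is a common generic way to handle L1 terms and is used here only as a stand-in; the paper's own two models and training algorithms may differ. All names and numbers are hypothetical.

```python
def soft_threshold(w, t):
    """Proximal operator of t * |w|: shrink w toward zero by t."""
    if w > t:
        return w - t
    if w < -t:
        return w + t
    return 0.0

def l1_proximal_step(V, grad, lr, lam):
    """One gradient step on the loss followed by L1 shrinkage.
    V[k] holds the outgoing weights of hidden node k."""
    return [
        [soft_threshold(v - lr * g, lr * lam) for v, g in zip(v_row, g_row)]
        for v_row, g_row in zip(V, grad)
    ]

def surviving_hidden_nodes(V):
    """A hidden node can be pruned once all its outgoing weights are zero."""
    return [k for k, row in enumerate(V) if any(v != 0.0 for v in row)]

# Hypothetical hidden-to-output weights: 3 hidden nodes, 2 outputs.
V = [[0.5, -0.3],
     [0.01, -0.02],  # weak node, expected to be pruned
     [0.2, 0.0]]
grad = [[0.0, 0.0] for _ in V]  # zero loss gradient, to isolate the shrinkage

V_new = l1_proximal_step(V, grad, lr=0.1, lam=0.5)
kept_nodes = surviving_hidden_nodes(V_new)
```

With these example values the shrinkage threshold is `lr * lam = 0.05`, so the second node's small weights are set exactly to zero and the node drops out of the network.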

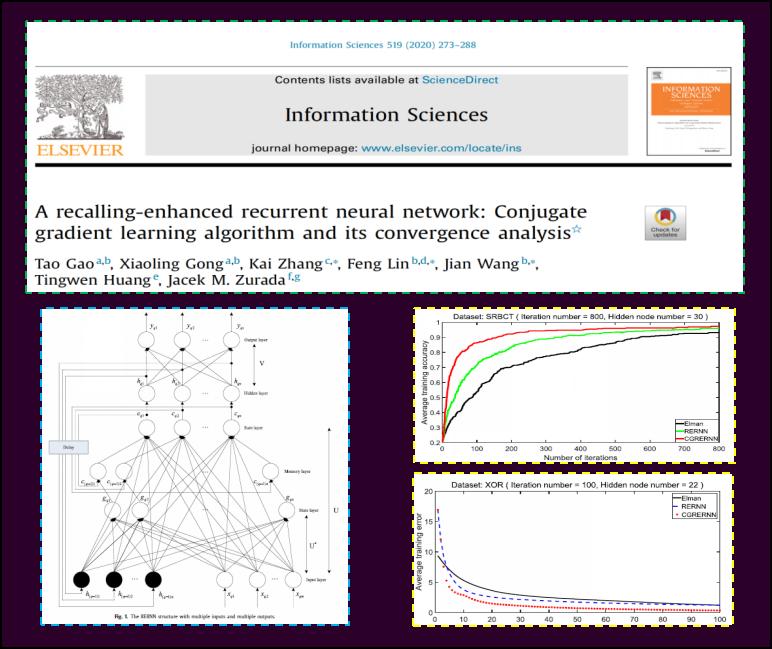

Fig. 3 A recalling-enhanced recurrent neural network: Conjugate gradient learning algorithm and its convergence analysis

The research paper entitled “A recalling-enhanced recurrent neural network: Conjugate gradient learning algorithm and its convergence analysis” was published in Information Sciences, a top journal in the field of artificial intelligence. Ph.D. student Gao Tao is the first author of this paper. Associate Prof. Wang Jian and Prof. Zhang Kai are the corresponding authors. Academician Jacek M. Zurada, a distinguished professor and part-time doctoral supervisor of UPC, participated in guiding the work. This work is supported by the National Natural Science Foundation of China, the Natural Science Foundation of Shandong Province, the Fundamental Research Funds for the Central Universities, the National Science and Technology Major Project of China under Grant, the Major Scientific and Technological Projects of CNPC under Grant and the Science and Technology Support Plan for Youth Innovation of University in Shandong Province.

Thanks to their special structure, recurrent neural networks have natural advantages in solving time series problems in artificial intelligence. To improve the performance of the learning algorithm, this paper constructed an adaptive conjugate gradient method to optimize the structural parameters. The effectiveness of the proposed model was verified both in theory and in practice.
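For readers unfamiliar with conjugate gradient training, the generic iteration can be written as follows. This is the textbook form, not the paper's adaptive variant: the symbols $w_k$ (network parameters), $g_k$ (gradient of the error function at $w_k$), $d_k$ (search direction), and $\eta_k$ (step size) are notation assumed here, and the two $\beta_k$ formulas are the classical Fletcher-Reeves and Polak-Ribière choices.

$$
\begin{aligned}
w_{k+1} &= w_k + \eta_k d_k, \\
d_0 &= -g_0, \qquad d_{k+1} = -g_{k+1} + \beta_k d_k, \\
\beta_k^{\mathrm{FR}} &= \frac{\lVert g_{k+1} \rVert^2}{\lVert g_k \rVert^2}, \qquad
\beta_k^{\mathrm{PR}} = \frac{g_{k+1}^{\top} (g_{k+1} - g_k)}{\lVert g_k \rVert^2}.
\end{aligned}
$$

Compared with plain gradient descent ($\beta_k = 0$), each new direction reuses part of the previous one, which typically speeds up convergence.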

The big data research team led by Associate Prof. Wang Jian works mainly on neural network model design, theoretical analysis of neural network models, feature selection, fractional neural networks, and big data processing for petroleum engineering within the field of artificial intelligence. In recent years, it has been supported by the National Natural Science Foundation of China, the National Natural Science Foundation Youth Project, the Shandong Key Research and Development Project, the Shandong Agricultural Department's Major Extension Project, the Shandong Natural Science Foundation, the International Cooperation and Exchange Fund, and more than 20 other key research and academic projects. The members have published more than 30 academic papers in prestigious international journals such as IEEE Transactions on Neural Networks and Learning Systems, IEEE Transactions on Cybernetics, Neural Networks, Information Sciences, IEEE Transactions on Knowledge and Data Engineering, Journal of Petroleum Science and Engineering, and Journal of Natural Gas Science and Engineering. To date, the members have published 1 book and more than 130 academic papers and have gained a degree of international influence in the field of artificial intelligence.

Related links for papers:

1. Feature Selection using a Neural Network With Group Lasso Regularization and Controlled Redundancy: https://ieeexplore.ieee.org/document/9091195

2. Learning Optimized Structure of Neural Networks by Hidden Node Pruning With L1 Regularization: https://ieeexplore.ieee.org/document/8911376

3. A recalling-enhanced recurrent neural network: Conjugate gradient learning algorithm and its convergence analysis: https://www.sciencedirect.com/science/article/pii/S0020025520300566

Updated: 2020-05-20